artificial inspiration

a project dedicated to enhancing human creativity through artificial intelligence

How can resources from the field of artificial intelligence be used to stimulate and increase human creativity in visual design, so that designers overcome the predictable and reach innovative, creative results? "Artificial Inspiration" attempts to find an answer to this question.

Let me know your opinion or idea on this.

Introduction

Can targeted, non-human stimuli break collective thought patterns and open up a new level of creativity in the interaction between humans and artificial intelligence? How can the significant advances made in AI in recent years be used to enrich the creative process of the future?

The ability to represent complex systems such as language or painting styles mathematically enables a completely new approach to a variety of topics. Connections in large amounts of data can be detected, and rules can be extracted from them. Based on these rules, data can be combined in ways that a person would be unlikely to arrive at unaided.

On this basis, the creative process was analyzed and compared with the possibilities of AI. The goal is to generate impulses from the linking of various data and to show new perspectives on existing problems, which inspire people to find new solutions: Artificial Inspiration.

For this purpose, a theoretical process for increasing creativity with the help of AI was developed, which essentially consists of two steps:

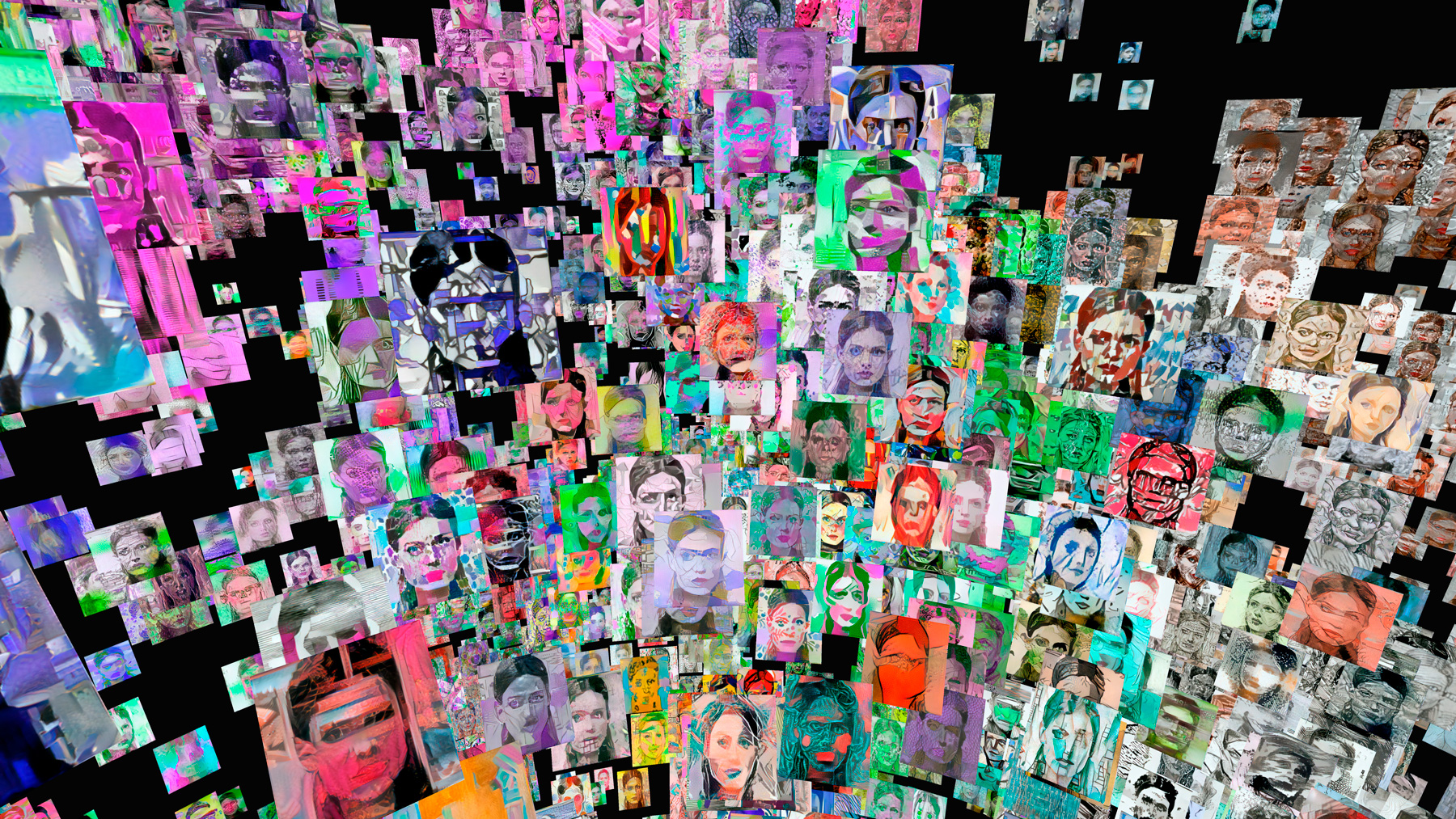

1. Generate as many different variations of one portrait as possible.

2. Help the individual identify the drafts that they find personally inspiring.

This theoretical process was then exemplified in the project using a specific practical design task: Finding new and creative ways to represent a portrait.

1. Generation of variations

In the first step, new kinds of portrait variations are generated. To this end, an initial portrait was generated with StyleGAN (Karras et al., 2019) using the pretrained "CelebA-HQ" model (Karras et al., 2018; seed: 2391).

In the next step, different algorithms and models were chained in specific combinations to manipulate the generated portrait and create new forms of representation. The set of models can be extended and reordered as desired, so the number of possible variations keeps growing.
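To illustrate how quickly reordering a handful of models multiplies the possible variations, here is a minimal sketch that enumerates every ordered chain of distinct stages. The stage names are hypothetical labels borrowed loosely from the notebooks listed at the end of this page; the combinations actually used in the project are not documented here, and no model is run.

```python
from itertools import permutations

# Hypothetical stage labels (assumption): placeholders for image-to-image
# models, not the project's actual pipeline.
STAGES = ["neural_style", "deepdream", "singan", "network_blending"]


def enumerate_pipelines(stages, max_length=None):
    """Return every ordered chain of distinct stages, from length 1 up to max_length."""
    if max_length is None:
        max_length = len(stages)
    pipelines = []
    for length in range(1, max_length + 1):
        pipelines.extend(permutations(stages, length))
    return pipelines


def describe(pipeline):
    """Render a chain as a readable string that starts from the source portrait."""
    return " -> ".join(("portrait",) + tuple(pipeline))


pipelines = enumerate_pipelines(STAGES)
print(len(pipelines))          # number of distinct ordered chains
print(describe(pipelines[4]))  # the first chain that uses two stages
```

With four stages this already yields 64 ordered chains before any parameter of an individual model is varied, which is why adding one more model enlarges the space of variants so quickly.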

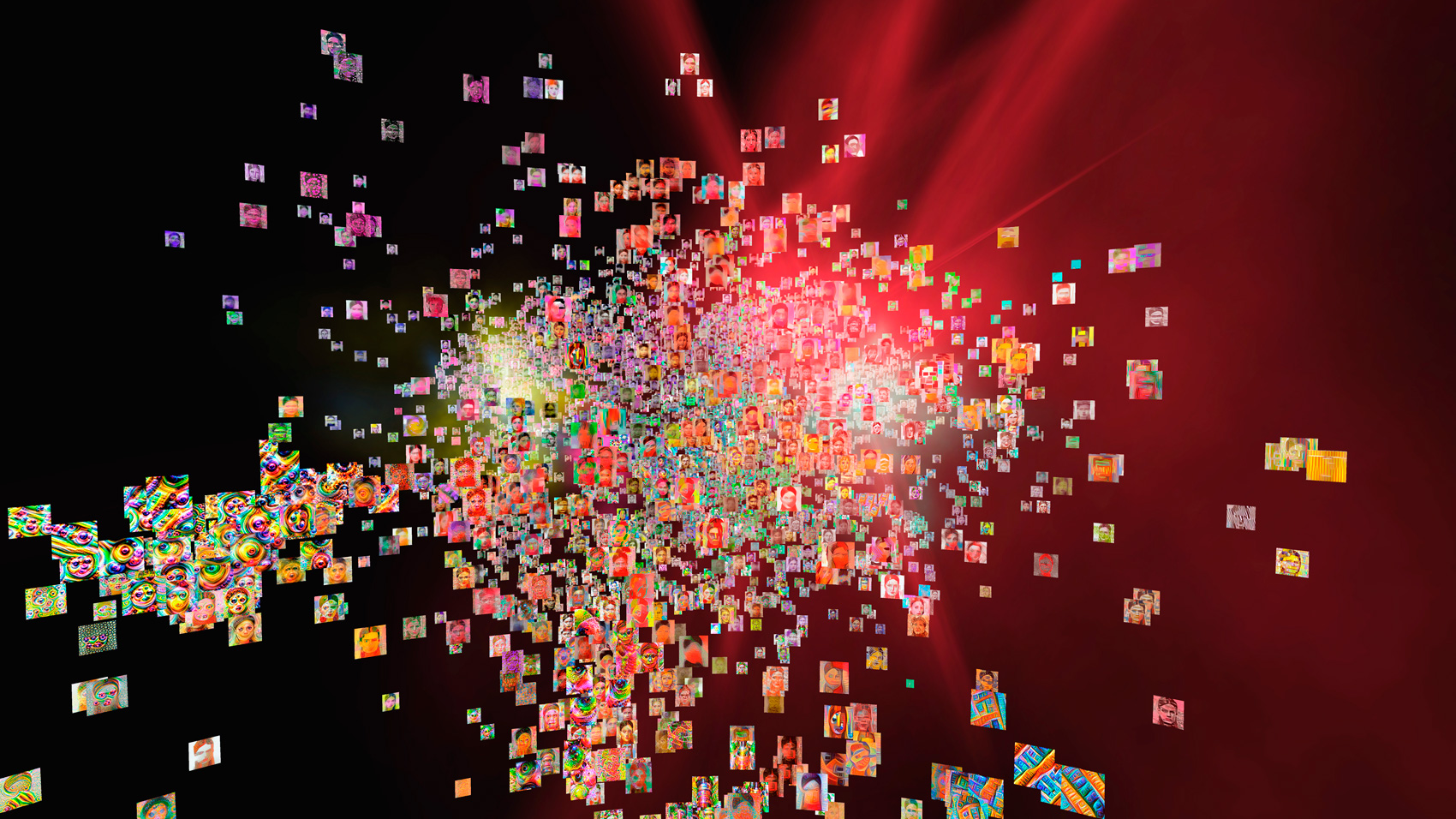

In this way, more than 10,000 portrait variants were generated in this example.

In addition, a StyleGAN2-ADA model was trained on the resulting image dataset, yielding a model that attempts to combine and reproduce all of the resulting styles.

2. Identify inspiring results

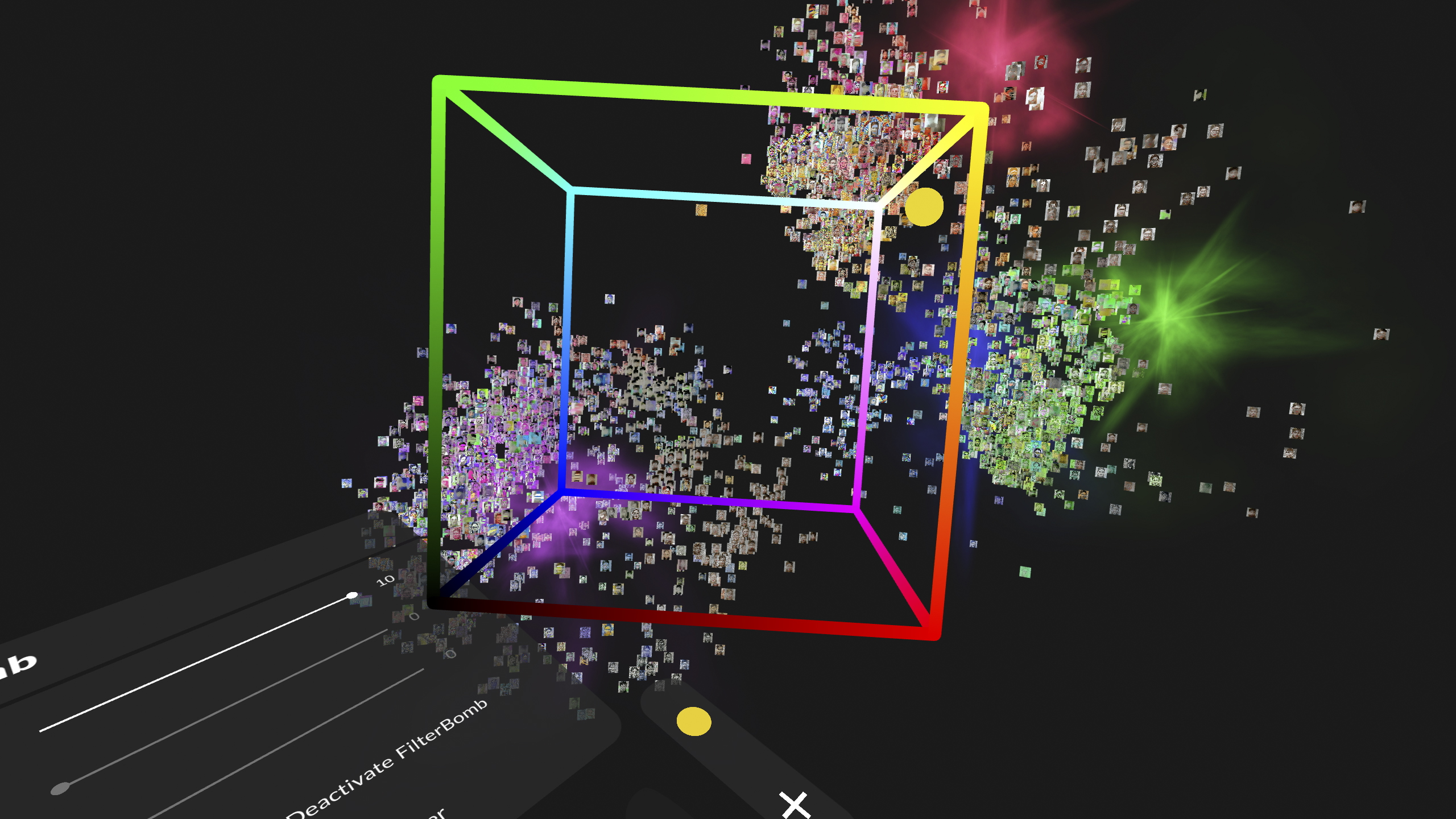

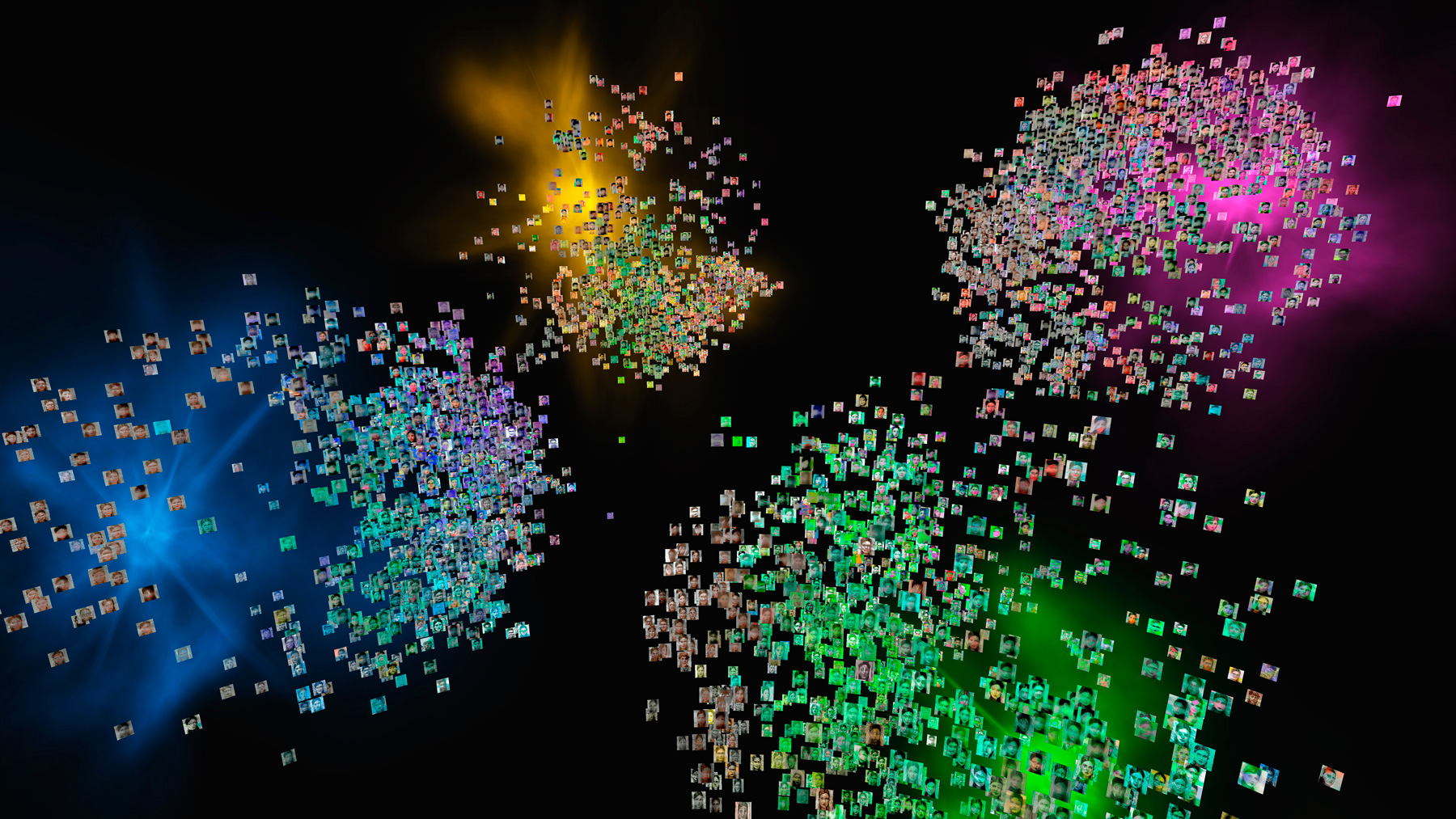

In the second step of the process, the images that an individual user finds personally inspiring have to be found among the multitude of results. To make this possible, all generated images are classified according to several criteria:

- General visual similarity (using Img2Vec – img2vec-keras by Jared Winick)

- Style of the image (using Img2Vec – img2vec-keras by Jared Winick)

- Color scheme (using KMeans)

- Degree of abstractness (using Face Recognition)
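As an illustration of the color-scheme criterion, the following sketch clusters a handful of RGB pixels with a plain k-means loop and returns the cluster centers as a palette, largest cluster first. It is written in pure Python for readability and is an assumption about the general approach; the project's actual KMeans setup (library, number of clusters, color space) is not stated, and the pixel values below are made up.

```python
import random


def squared_distance(a, b):
    """Squared Euclidean distance between two RGB tuples."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def assign(pixels, centers):
    """Group pixels by the index of their nearest center."""
    clusters = [[] for _ in centers]
    for pixel in pixels:
        nearest = min(range(len(centers)),
                      key=lambda i: squared_distance(pixel, centers[i]))
        clusters[nearest].append(pixel)
    return clusters


def kmeans_palette(pixels, k, iterations=20, seed=0):
    """Return k representative colors, ordered from largest to smallest cluster."""
    rng = random.Random(seed)
    # Start from k randomly chosen pixels.
    centers = [tuple(float(c) for c in p) for p in rng.sample(pixels, k)]
    for _ in range(iterations):
        clusters = assign(pixels, centers)
        new_centers = []
        for center, members in zip(centers, clusters):
            if members:
                # Move the center to the mean color of its members.
                new_centers.append(
                    tuple(sum(ch) / len(members) for ch in zip(*members)))
            else:
                new_centers.append(center)  # keep the center of an empty cluster
        if new_centers == centers:
            break  # converged
        centers = new_centers
    clusters = assign(pixels, centers)
    ranked = sorted(zip(centers, clusters),
                    key=lambda pair: len(pair[1]), reverse=True)
    return [tuple(round(c) for c in center) for center, _ in ranked]


# Made-up sample: four reddish and two bluish pixels.
PIXELS = [(250, 10, 10), (254, 2, 6), (246, 6, 2), (250, 6, 6),
          (10, 10, 250), (2, 6, 254)]
palette = kmeans_palette(PIXELS, k=2)
print(palette)
```

Two images can then be compared by their palettes, for example by the distance between their dominant colors.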

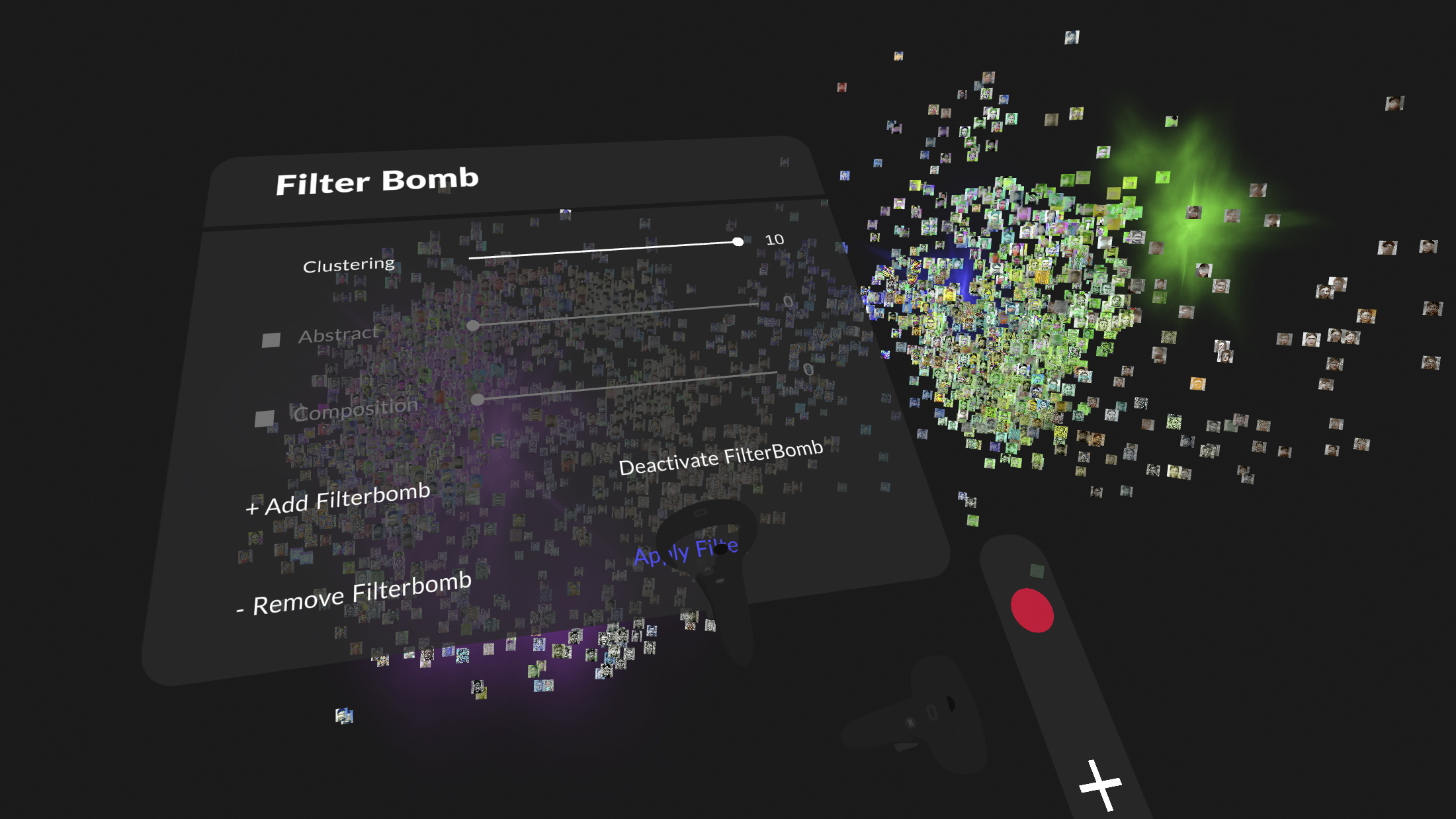

Based on this analysis, each image is assigned a three-dimensional vector. A virtual reality application was then developed that allows navigation through this three-dimensional space of images. In the application, the images can also be filtered by the criteria listed above and clustered with the help of "filter bombs". This makes it possible to explore different perspectives on the subject of portraits, save inspiring approaches, compare them, and develop new ideas from them.
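One simple way to picture working with such a space is a radius query: given a point, collect all images whose vectors lie within a certain distance of it. The sketch below assumes each image already has a 3D position; the file names and coordinates are hypothetical, and it does not claim to reproduce how the VR application or its "filter bombs" are implemented.

```python
import math

# Hypothetical positions (assumption): image name -> (x, y, z).
positions = {
    "portrait_0001.png": (0.0, 0.0, 0.0),
    "portrait_0002.png": (0.5, 0.0, 0.0),
    "portrait_0003.png": (0.0, 2.0, 0.0),
    "portrait_0004.png": (0.3, 0.3, 0.3),
}


def images_within_radius(positions, center, radius):
    """Return (name, distance) pairs inside the sphere around center, nearest first."""
    hits = []
    for name, point in positions.items():
        distance = math.dist(point, center)  # Euclidean distance (Python 3.8+)
        if distance <= radius:
            hits.append((name, distance))
    return sorted(hits, key=lambda hit: hit[1])


nearby = images_within_radius(positions, center=(0.0, 0.0, 0.0), radius=1.0)
print([name for name, _ in nearby])
```

For a collection of about 10,000 images a linear scan like this is fast enough; a spatial index would only become relevant for much larger sets.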

References

Karras, T., Aila, T., Laine, S., Lehtinen, J. (2018): Progressive Growing of GANs for Improved Quality, Stability, and Variation.

https://arxiv.org/pdf/1710.10196.pdf

(Retrieved: 02.01.2021)

Karras, T., Laine, S., Aila, T. (2019): A Style-Based Generator Architecture for Generative Adversarial Networks.

https://arxiv.org/pdf/1812.04948.pdf

(Retrieved: 31.12.2020)

Winick, J. (2019): img2vec-keras.

https://github.com/jaredwinick/img2vec-keras

(Retrieved: 04.11.2020)

Datasets used in this project

Bryan, B. (2020): Abstract Art Gallery. Version 10.

https://www.kaggle.com/bryanb/abstract-art-gallery/version/10

(Retrieved: 10.11.2020)

Kaggle (2016): Painter By Numbers.

https://www.kaggle.com/c/painter-by-numbers/data

(Retrieved: 11.12.2020)

Karras, T., Aittala, M., Hellsten, J., Laine, S., Lehtinen, J., Aila, T. (2020): MetFaces. Version 1.

https://github.com/NVlabs/metfaces-dataset

(Retrieved: 03.01.2021)

Sage, A., Agustsson, E., Timofte, R., Van Gool, L. (2017): LLD – Large Logo Dataset. Version 0.1.

https://data.vision.ee.ethz.ch/cvl/lld

(Retrieved: 24.10.2020)

Surma, G. (2019): Abstract Art Images. Version 1.

https://www.kaggle.com/greg115/abstract-art/version/1

(Retrieved: 10.11.2020)

Code and Notebooks used in this project

Pinkney, J. (2020): Network blending in StyleGAN.

https://colab.research.google.com/drive/1tputbmA9EaXs9HL9iO21g7xN7jz_Xrko?usp=sharing

(Retrieved: 26.10.2020)

Schultz, D.: DeepDreaming with TensorFlow.

https://github.com/dvschultz/ml-art-colabs/blob/master/deepdream.ipynb

(Retrieved: 22.11.2020)

Schultz, D. (2019): SinGAN.

https://github.com/dvschultz/ai/blob/master/SinGAN.ipynb

(Retrieved: 02.11.2020)

Schultz, D. (2020): Neural Style TF.

https://github.com/dvschultz/ai/blob/master/neural_style_tf.ipynb

(Retrieved: 26.10.2020)

Schultz, D. (2020): StyleGAN2.

https://github.com/dvschultz/ai/blob/master/StyleGAN2.ipynb

(Retrieved: 21.10.2020)